Dong Gong

Continual Learning with Sparse Neural Networks

Paper

Learning Bayesian Sparse Networks with Full Experience Replay for Continual Learning

Qingsen Yan*, Dong Gong*, Yuhang Liu, Anton van den Hengel, Javen Qinfeng Shi (*Equal Contribution)

In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 109-118. 2022.

[Paper]

[arXiv (to-be-updated)]

[Code (to-be-uploaded)]


Abstract

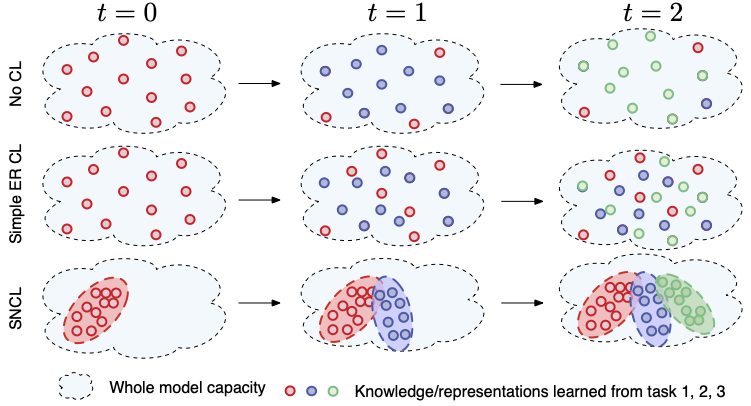

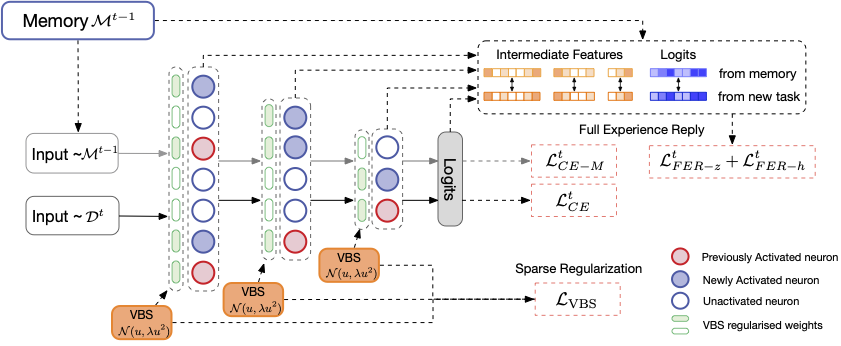

Continual Learning (CL) methods aim to enable machine learning models to learn new tasks without catastrophic forgetting of those that have been previously mastered. Existing CL approaches often keep a buffer of previously seen samples, perform knowledge distillation, or use regularization techniques towards this goal. Despite their performance, they still suffer from interference across tasks, which leads to catastrophic forgetting. To ameliorate this problem, we propose to activate and select only a sparse set of neurons for learning current and past tasks at any stage. More parameter space and model capacity can thus be reserved for future tasks. This minimizes the interference between parameters for different tasks. To do so, we propose a Sparse neural Network for Continual Learning (SNCL), which employs variational Bayesian sparsity priors on the activations of the neurons in all layers. Full Experience Replay (FER) provides effective supervision in learning the sparse activations of the neurons in different layers. A loss-aware reservoir-sampling strategy is developed to maintain the memory buffer. The proposed method is agnostic to network structure and task boundaries. Experiments on different datasets show that SNCL achieves state-of-the-art results in mitigating forgetting.
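For readers unfamiliar with reservoir sampling, the sketch below shows the standard, loss-agnostic version (Algorithm R) that a replay buffer of this kind typically builds on. It is only an illustration and not the loss-aware strategy of the paper, whose details are not given on this page. The class name `ReservoirBuffer` and its methods are hypothetical.

```python
import random


class ReservoirBuffer:
    """Fixed-size memory buffer filled by standard reservoir sampling.

    Hypothetical illustration only: this is plain Algorithm R, not the
    loss-aware variant used by SNCL.
    """

    def __init__(self, capacity, seed=None):
        self.capacity = capacity
        self.items = []      # stored samples
        self.num_seen = 0    # number of stream items observed so far
        self._rng = random.Random(seed)

    def add(self, item):
        """Offer one stream item to the buffer."""
        self.num_seen += 1
        if len(self.items) < self.capacity:
            # Buffer not full yet: always keep the item.
            self.items.append(item)
            return
        # Buffer full: keep the new item with probability capacity / num_seen,
        # overwriting a uniformly chosen slot.
        j = self._rng.randrange(self.num_seen)
        if j < self.capacity:
            self.items[j] = item

    def sample(self, batch_size):
        """Draw a replay batch without replacement."""
        k = min(batch_size, len(self.items))
        return self._rng.sample(self.items, k)


if __name__ == "__main__":
    buffer = ReservoirBuffer(capacity=5, seed=0)
    for sample_id in range(100):
        buffer.add(sample_id)
    print(buffer.items)       # five of the 100 ids, each kept with equal probability
    print(buffer.sample(3))   # a replay batch of three stored ids
```

Because every stream item ends up in the buffer with equal probability, this version needs no knowledge of task boundaries. A loss-aware variant would presumably bias the keep-or-replace decision using per-sample loss, but that rule is an assumption here and is left out.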

Overview of the Model


Visualization of the trained model


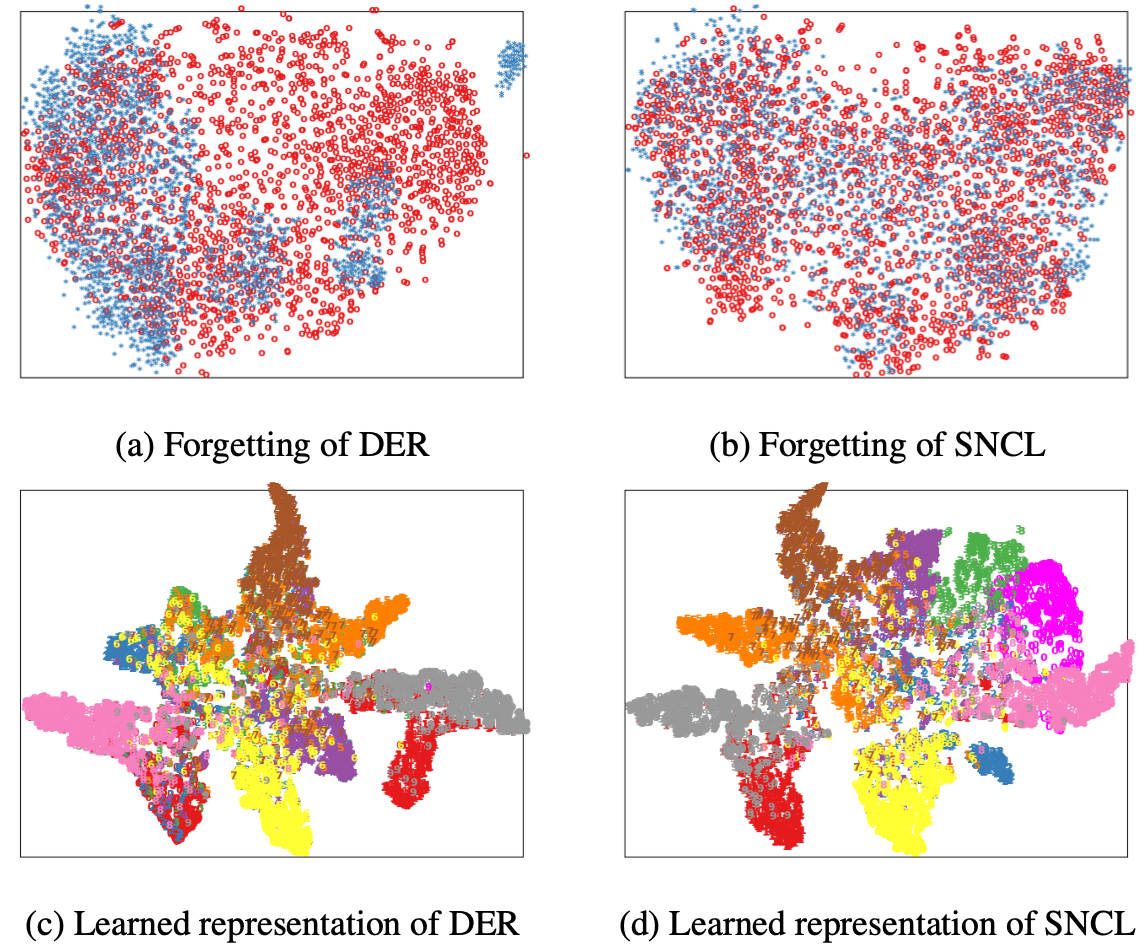

Visualizing t-SNE embeddings on S-CIFAR-10.

(a) and (b) analyze the forgetting issue. Blue points are from a task after other tasks have been learned in CL; red points are obtained by training on that single task alone (so no forgetting occurs) and serve as a reference.

(c) and (d) show the representations of all the classes after CL.

Our Related Works

Variational Bayesian Dropout with a Hierarchical Prior

Yuhang Liu, Wenyong Dong, Lei Zhang, Dong Gong, Qinfeng Shi

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

[arXiv] [Project]

Memorizing Normality to Detect Anomaly: Memory-augmented Deep Autoencoder for Unsupervised Anomaly Detection

Dong Gong, Lingqiao Liu, Vuong Le, Budhaditya Saha, Moussa Reda Mansour, Svetha Venkatesh, Anton van den Hengel

In IEEE International Conference on Computer Vision (ICCV), 2019.

[arXiv] [Project] [Github]