Dong Gong

Memorizing Normality to Detect Anomaly: Memory-augmented Deep Autoencoder (MemAE) for Unsupervised Anomaly Detection

Dong Gong, Lingqiao Liu, Vuong Le, Budhaditya Saha, Moussa Reda Mansour, Svetha Venkatesh, Anton van den Hengel


Abstract

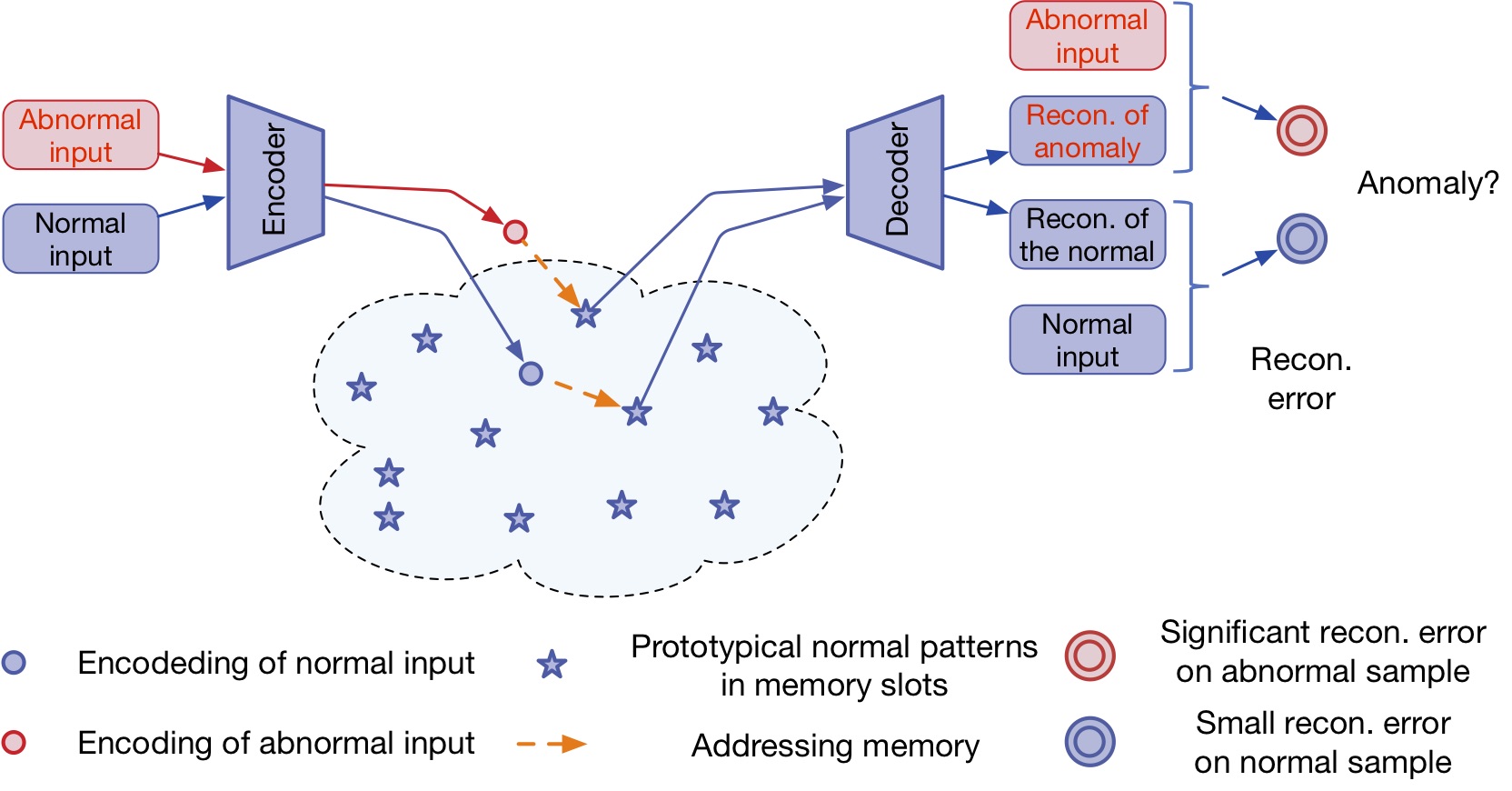

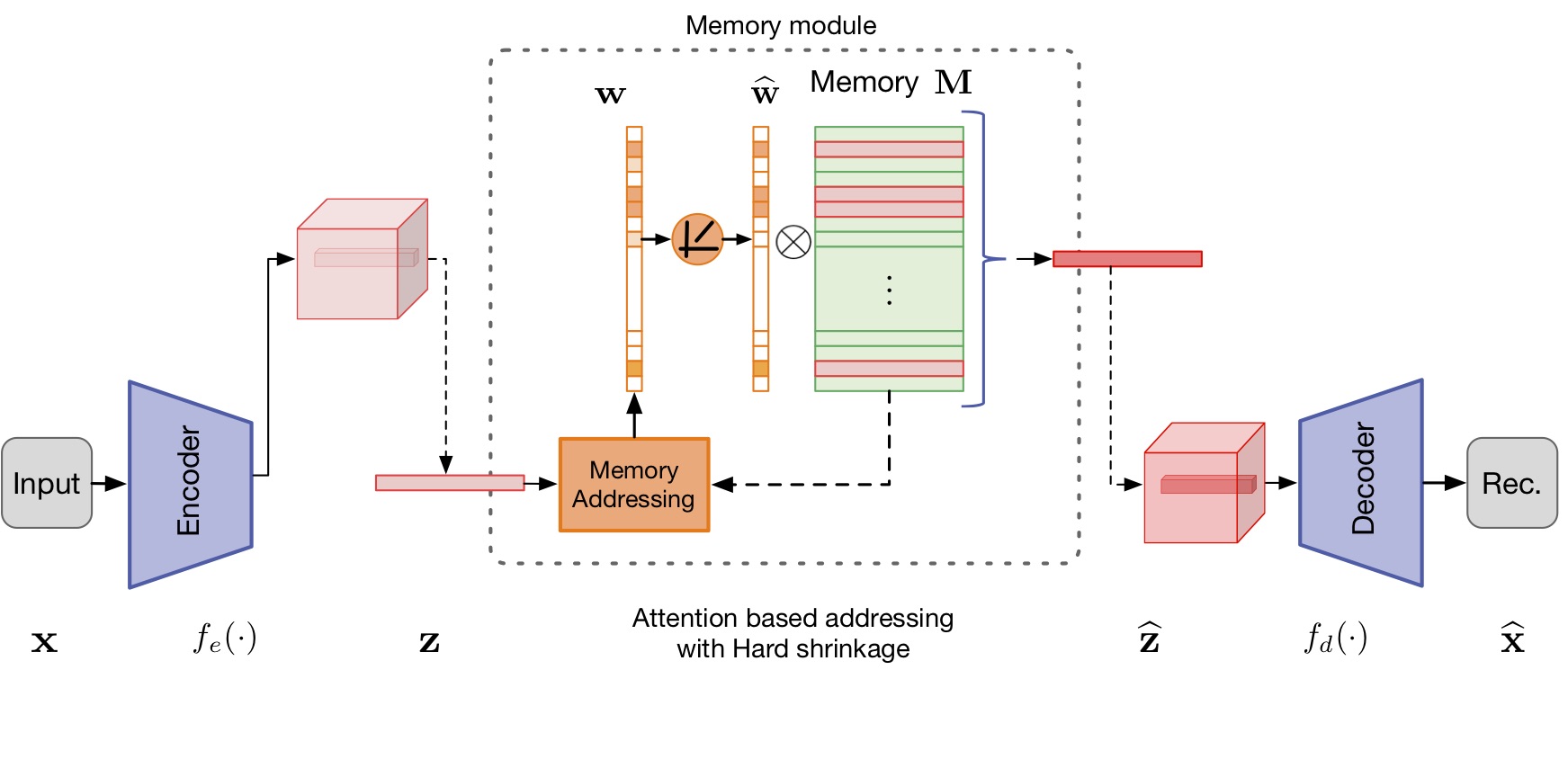

Deep autoencoders have been used extensively for anomaly detection. Trained on normal data, an autoencoder is expected to produce a higher reconstruction error for abnormal inputs than for normal ones, and this error is adopted as the criterion for identifying anomalies. However, this assumption does not always hold in practice. It has been observed that the autoencoder sometimes “generalizes” so well that it can also reconstruct anomalies well, so that anomalies go undetected. To mitigate this drawback of autoencoder-based anomaly detectors, we propose to augment the autoencoder with a memory module and develop an improved autoencoder called the memory-augmented autoencoder, i.e., MemAE. Given an input, MemAE first obtains the encoding from the encoder and then uses it as a query to retrieve the most relevant memory items for reconstruction. At the training stage, the memory contents are updated and encouraged to represent the prototypical elements of the normal data. At the test stage, the learned memory is fixed, and the reconstruction is obtained from a few selected memory records of the normal data. The reconstruction thus tends to be close to a normal sample, which increases the reconstruction error on anomalies and makes them easier to detect. MemAE is free of assumptions about the data type and is therefore general enough to be applied to different tasks. Experiments on various datasets demonstrate the strong generalization and high effectiveness of the proposed MemAE.
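The retrieval step described in the abstract (use the encoding as a query, select a few relevant memory items, rebuild the latent code from them) can be sketched in plain Python. This is only an illustrative sketch, not the released implementation: the cosine similarity, the softmax, the hard threshold with a default of 1/N, and the function names `address_memory` and `read_memory` are all assumptions made for the example.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)


def address_memory(query, memory, threshold=None):
    """Return sparse attention weights over the memory items.

    Assumed scheme: softmax over cosine similarities, then weights at or
    below `threshold` are zeroed and the rest are re-normalized to sum to 1.
    """
    sims = [cosine(query, item) for item in memory]
    peak = max(sims)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - peak) for s in sims]
    total = sum(exps)
    weights = [e / total for e in exps]

    if threshold is None:
        threshold = 1.0 / len(memory)  # hypothetical default, not from the source
    kept = [w if w > threshold else 0.0 for w in weights]
    kept_sum = sum(kept)
    if kept_sum == 0.0:  # e.g. all weights equal: fall back to dense weights
        return weights
    return [w / kept_sum for w in kept]


def read_memory(query, memory, threshold=None):
    """Rebuild a latent code as a weighted sum of the selected memory items."""
    weights = address_memory(query, memory, threshold)
    dim = len(memory[0])
    latent = [
        sum(weights[i] * memory[i][d] for i in range(len(memory)))
        for d in range(dim)
    ]
    return latent, weights


# Toy memory with three 2-D "prototypes"; the query is closest to the first.
memory = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
latent, weights = read_memory([0.9, 0.1], memory)
print(weights, latent)
```

In this toy case only the first memory item survives the threshold, so the latent code handed to the decoder is that prototype itself rather than the original encoding. A decoder fed this code can only produce something built from stored normal patterns, which is the behaviour the abstract relies on.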

Network


Visualizing How the Memory Works (with MNIST)

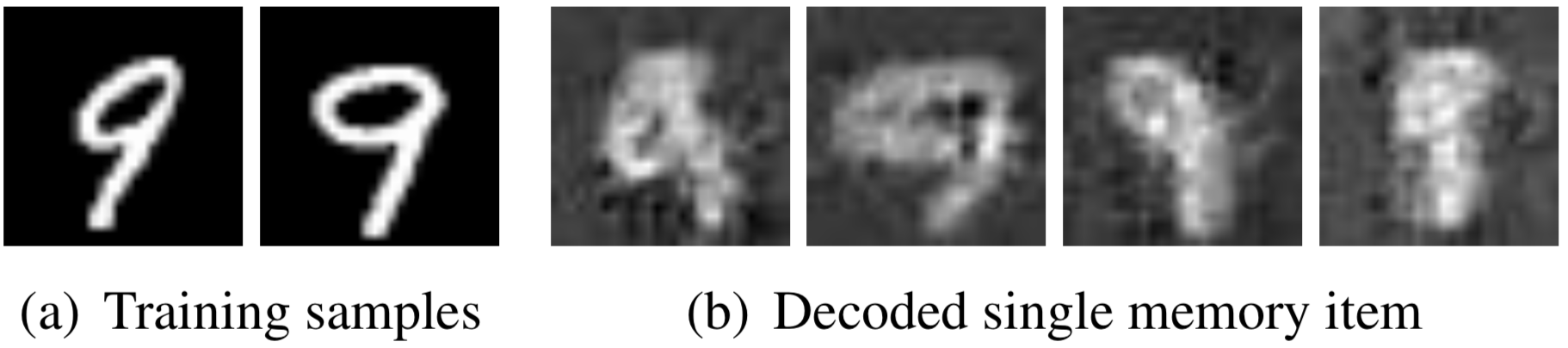

What the memory learns.


Memory slots learned on MNIST by treating “9” as normal data. We randomly select a single memory item and perform decoding. The decoded single memory slot in (b) appears as a prototypical pattern of the normal samples.

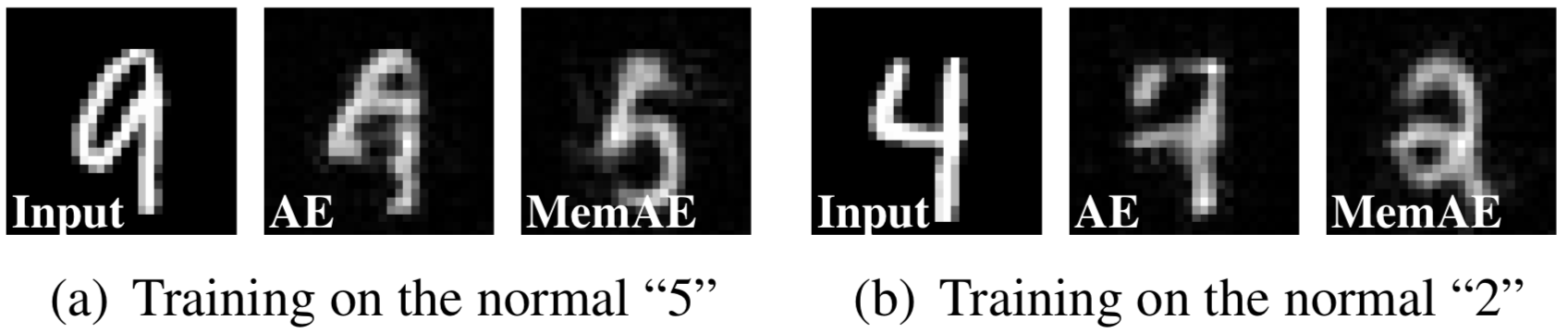

How the memory module augments reconstruction.


Reconstruction results of AE and MemAE on MNIST. (a) The models are trained on “5”; the input is an image of “9”. (b) The models are trained on “2”; the input is an image of “4”. MemAE retrieves normal memory items for reconstruction and produces results that differ significantly from the anomalous inputs.
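How a reconstruction that stays close to the normal class turns into a detection signal can be shown with a small numeric sketch. The mean squared error used as the score, the threshold value, and the toy vectors below are assumptions chosen for illustration; they are not data or settings from the paper.

```python
def reconstruction_error(x, x_hat):
    """Mean squared error between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def flag_anomalies(inputs, reconstructions, threshold):
    """Pair each reconstruction error with a flag: True if above threshold."""
    scores = [reconstruction_error(x, r) for x, r in zip(inputs, reconstructions)]
    return [(score, score > threshold) for score in scores]


# Hypothetical 4-pixel "images". Both reconstructions resemble the normal
# pattern, as they would if the decoder could only draw on normal prototypes.
normal_input = [1.0, 0.0, 1.0, 0.0]
anomalous_input = [0.0, 1.0, 0.0, 1.0]
normal_like_output = [0.9, 0.1, 1.0, 0.0]

results = flag_anomalies(
    [normal_input, anomalous_input],
    [normal_like_output, normal_like_output],
    threshold=0.1,  # hypothetical; in practice chosen on validation data
)
for score, is_anomaly in results:
    print(round(score, 3), is_anomaly)
```

The normal input is reconstructed almost exactly and receives a small score, while the anomalous input is pulled toward the normal pattern and receives a large one.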

Paper

Dong Gong, Lingqiao Liu, Vuong Le, Budhaditya Saha, Moussa Reda Mansour, Svetha Venkatesh, Anton van den Hengel. Memorizing Normality to Detect Anomaly: Memory-augmented Deep Autoencoder for Unsupervised Anomaly Detection. arXiv preprint arXiv:1904.02639, 2019.

Our Related Works

Learning and Memorizing Representative Prototypes for 3D Point Cloud Semantic and Instance Segmentation

Tong He*, Dong Gong*, Zhi Tian, Chunhua Shen (* Equal contribution)

In European Conference on Computer Vision (ECCV), 2020.

[arXiv] [Code]

Memory-augmented Dynamic Neural Relational Inference

Dong Gong, Zhen Zhang, Javen Shi, Anton van den Hengel

In IEEE International Conference on Computer Vision (ICCV), 2021. (Accepted)

Bayesian Sparse Networks with Full Experience Replay for Continual Learning

Dong Gong*, Qingsen Yan*, Yuhang Liu, Anton van den Hengel, Javen Qinfeng Shi (* Equal contribution)

In CVPR, 2022.